Introduction

Algorithms have this arcane aura, like they’re spells software engineers cast to gather data, deliver search results, and recommend videos. In reality, like most computer science jargon, “algorithm” is a strange word for a simple concept. An algorithm is just any systematic way of making a decision. When you cook according to a recipe or navigate using a map, you’re executing an algorithm.

Algorithms in computer science are, however, distinguished by proofs. There’s no way to prove a particular recipe is the best, and no point if there was – people have different tastes. But we can prove that mergesort, or any other n-log-n sorting algorithm, is strictly optimal. We know which algorithms are best and why. Formal algorithms have a clarity that real decision contexts lack.

Looking to algorithms for inspiration on how to improve my decisions in both Magic and life in general has let me add some of that clarity to my own processes. I touched on this perspective with epsilon-greedy exploration in my Pro Tour Finals report. In this article, I’d like to introduce some other algorithms I’ve personally found insightful.

Imagine the Worst

Even the most sophisticated modern game AI use variations on a basic decision-making algorithm called minimax. In minimax, instead of trying to deduce what the other players in a game will do, or even figure out what’s most likely, the AI simply assumes that every time an opponent has a decision, they will cause the worst possible outcome. Despite its simplicity, this heuristic is effective and resilient.

This framework is helpful in all kinds of Magic contexts, but it’s by far the most useful in combat. When there’s 5 creatures on both sides of the battlefield, fully mapping out what happens when you alpha is nigh impossible with 120 combinations of just single-blocks, let alone double-blocks and triple-blocks. Instead of worrying about bluffs or rebluffs or tendencies, just work out the worst possible outcome assuming your opponent has perfect information about your hand. If you have a trick, they know about it and will play around it perfectly. You’ll often find that the worst case scenario isn’t so bad, and then you can stop thinking right there and attack with confidence. Whatever actually happens will be better for you than what you worked out. If the worst-case scenario is bad, of course, leave the team at home.

When your opponent then does something suboptimal after you attack, you can pause and consider why. Your opponent played into your trick? Maybe they have a removal spell, or want to force your trick so you don’t have access to it for a more important exchange on a later turn. Of course, sometimes your opponent doesn’t read you for a trick or simply can’t afford to play around it, so don’t go too far with this reasoning. But the better your opponent, the more suspicious any suboptimal decisions become. And because you’ve identified the true worst-case scenario, you can immediately identify when these situations come up.

Once you’re comfortable quickly evaluating worst-case scenarios, it’ll feel a bit like predicting the future. Good players will do exactly what you expect them to, and you can scale back and work out what happens if you attack with fewer creatures. You’ll recognize how players expect you to block and if those blocks look favorable for you, work out what holdings would let them win combat.

Backpropagation

The other secret sauce of modern game AI is Monte Carlo tree search, usually abbreviated MCTS. The basic concept of MCTS is that the computer takes random actions on both sides until it finds a terminal game state. It generates a game tree by repeating this process thousands or millions of times, from which it finds the best play. There are many refinements on MCTS, with neural networks and reduced action spaces and depth limitations, but AI up to AlphaGo use versions of it.

Of course, simulating thousands of games is a unique strength of computers. But MCTS has a key practical insight: find the winning terminal states and move backwards. In a game of Magic, instead of looking at what will happen this turn or next turn or even 4 turns in the future, just figure out what a game that you win looks like. Sometimes it’ll involve drawing some key cards or your opponent making a mistake, but working toward a distinct game state is key.

One of my favorite quotes is from General George Patton in World War II.

Image Copyright: Wikipedia

A good plan, violently executed now, is better than the perfect plan next week.

―George S. Patton

And no plan at all is, of course, a non-starter. When you start out thinking this way, you won’t pick the best plans, ie. the most likely terminal states. But by having a plan and sticking to it, you’ll get a sense of how things can go right and wrong and what makes some plans better than others. Keep at it, and you’ll develop an intuition for good and bad plans. And you’ll find that executing even a bad plan well is more effective than looking at the game one turn at a time.

Water Works

To evaluate any game mathematically, you need a utility function. For a lot of games, the utility function can be simple: did you win? If yes, 1; if no, 0. But for complicated games like chess, go, or Magic, you need more sophistication. A big part of AlphaGo, for example, was a neural network trained to score game states. The basic AlphaGo decision loop was to use MCTS for 20 moves, pass the resulting game states to the neural network, and then minimax the resulting tree.

For complicated games, scoring is mostly intuition. Even neural networks are just algorithmic attempts to formalize intuition. However, we do have some observable measures in Magic. For example, taking our first footsteps into competitive Magic, we’re all taught that card advantage is king and life is a resource. These heuristics are certainly useful for newer players, but I hate them. You win games of Magic by reducing your opponent’s life total to zero, not by casting the most 《Divination》.

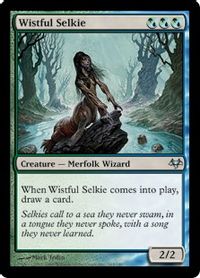

I personally think of Magic in terms of mana, cards, life, and turns. If you cast a 《Dark Confidant》 and I 《Lightning Bolt》 it, then I gained a mana and we traded evenly on cards, life, and turns – a great exchange for me. If you cast a 《Wistful Selkie》 and I 《Lightning Bolt》 it, then I gained 2 mana, you gained a card, and everything else broke even. Who that favors depends on whether cards or mana is the more important resource, but it’s just an exchange of resources.

Of these 4 metrics, mana is almost always the most important. This is why 1-mana cards are so powerful. If you play a 《Noble Hierarch》 on turn 1, outside of corner cases like 《Gut Shot》 and 《Lava Dart》, it’s literally impossible for me to gain a mana advantage answering it. Conversely, 《Lightning Bolt》 will always trade at least evenly on mana and will frequently trade up.

The values of cards and life are highly contextual, but in our Modern age of 《Uro, Titan of Nature’s Wrath》 and 《Expressive Iteration》 and 《Lurrus of the Dream-Den》, cards are rarely a key constraint. But regardless of whether cards or life is situationally more important, you should almost always be happy to trade either for mana.

Turns are by far the most valuable resource, since they represent at minimum a card and 5+ mana, but lines that profit turns come up rarely. When you have access to a line that might gain a full turn, like attacking with more creatures to take a turn off the clock or using a combat trick to end the game immediately, you should almost always take it.

Another useful concept is “key cards”. A key card provides some kind of recurring advantage in a matchup and is usually hard to answer. For example, by every other metric, 《Underworld Connections》 is an unplayable card. You can pay 4 mana and 1 life for 1 card, 5 mana and 2 life for 2 cards, etc. These are all horrible exchanges, up to 4 cards. But there simply aren’t many 4-for-1s in Magic and 《Underworld Connections》 was literally unanswerable in the old Mono-Black Devotion mirrors, which were slow, grindy, and rarely came down to life totals. If you can exchange for a key card at any rate, you’ve profited.

This is by no means an exhaustive system for evaluating Magic, but I hope it provides some insight. And whether you use my scoring system or come up with your own, you need a system.

Fish in the Sea

Imagine you’re hiring an employee. You have 100 applicants, which you’ll interview one at a time. After each interview, you need to make a decision to hire or not. If you pass on someone, they’re gone forever. But if you hire someone, that’s it – the position is filled, and you’ll never know if someone better was waiting. How do you decide?

Coined in ancient, less politically correct times, this set-up is best known as “the secretary problem”. It turns out it has a strictly optimal solution, but the solution doesn’t guarantee hiring the best candidate. With 100 candidates, you’d interview the first 37 candidates and take note of the top contender, without hiring anyone. After 37, you immediately hire anyone who beats the best candidate in the first batch. Because the overall best candidate might be in the first 37 or you might never get to them, this strategy finds the best candidate 37% of the time.

Of course, in real life, you have more flexibility than the rigid theoretical set-up. You could interview all of the candidates, for example, and only respond to the best candidates after hearing from everyone. And even after rejecting someone, if things fall through with your first choice, your second choice might still be open to working for you. Even so, the secretary problem offers some interesting insights.

First, it suggests the value of clearly identifying the pool of available candidates and tracking their performance. For example, if you’re selecting a deck for a tournament, start by identifying how many decks are in the metagame. If there are 20 playable decks, the optimal stopping strategy would be trying 7-8 before even considering a selection. If you pick a deck before trying that many, you’re likely stopping too early. Even if you have success with a deck early on, there’s likely something better waiting still.

Second, the mathematically optimal strategy here only has a 37% chance of success. Life has infinite confounding factors, and more often than not you won’t find the best deck in a format. You succeed in testing by trying enough decks and properly evaluating your results, not by finding the best deck.

Lastly, when you’re making your final choice, weigh it carefully against your prior experience. I’ve often made the mistake of overestimating a deck just because I tried it immediately after testing something truly horrible. But the key comparison is how the deck you’re considering performs compared to the best decks you’ve tried so far, not the last deck you tried.

Conclusion

My favorite algorithmic parable is finding the majority element in a list of integers. You’re guaranteed a majority element exists, ie. that one number represents more than half the list. You can divide-and-conquer to group the list and get a deterministic result, but you’ll never do better than O(n log(n)) runtime that way. But if you pick an element from the list at random and just check whether it’s the majority element, you average O(n), which is significantly faster. After all, more than half the elements in the list are the majority element. It’d be unlucky if you didn’t guess it on your first try.

That’s a lot of jargon, but the takeaway is that the optimal solution is sometimes as simple as picking a number at random and running with it. It’s easy to overthink things, a problem I’m certainly susceptible to. That said, I hope this article offers some insight into what you can learn when you take the opposite approach and let complexity reign.

If you want to read more about game-playing algorithms, you can find the lecture notes from a Stanford course on general game AI here and the AlphaGo paper here.

As always, best of luck.

Allen Wu (Twitter)